There’s a common misconception that in order to run machine learning and other algorithms on data, you need to be working within a specific data science platform like TIBCO or Alteryx. While these platforms certainly come with specialized benefits, there are use cases where it’s possible, not to mention easier and more cost effective, to do machine learning inside a database. We recently hosted a Python and Machine Learning livestream, where Joel Wasserman, a Google engineer and startup founder, used a database to build a Python app and run a machine learning model on the data to predict whether it’s safe to go skydiving. I highly recommend checking out Joel’s demo at the link, but in the meantime, let’s explore the theory in question.

Machine Learning / Data Science Platform Requirements

- Visualization

- Deployment

- Performance engineering data preparation

- Data access

- Ability to work online & offline

This article does a great job of summarizing what a good Data Science and Machine-Learning Platform should have. To reiterate, my claim is not that databases are the same as a dedicated data science platform; the two offer different functionality for different use cases. The argument is that you can do machine learning inside a database, and that certain use cases, like quicker or simpler calculations, might be better served by a database thanks to the speed, convenience, and cost effectiveness of some systems.

Looking at the requirements above, one could argue that a database can provide most, if not all, of this functionality in some way. Most databases come with some visualization functionality, along with connectors to popular BI and analytics tools for more advanced visualization needs. Databases enable rapid data access, as well as the deployment, extraction, and transfer of data to where it needs to go. They enable users to import large amounts of data in real time and run machine learning models on that data as soon as it enters the database, all while having the flexibility to test, explore, and analyze at the same time. Lastly, most databases in this day and age should let users work online or offline, in the cloud or locally, so that folks can access their data where and when they need it.

So what are the benefits here?

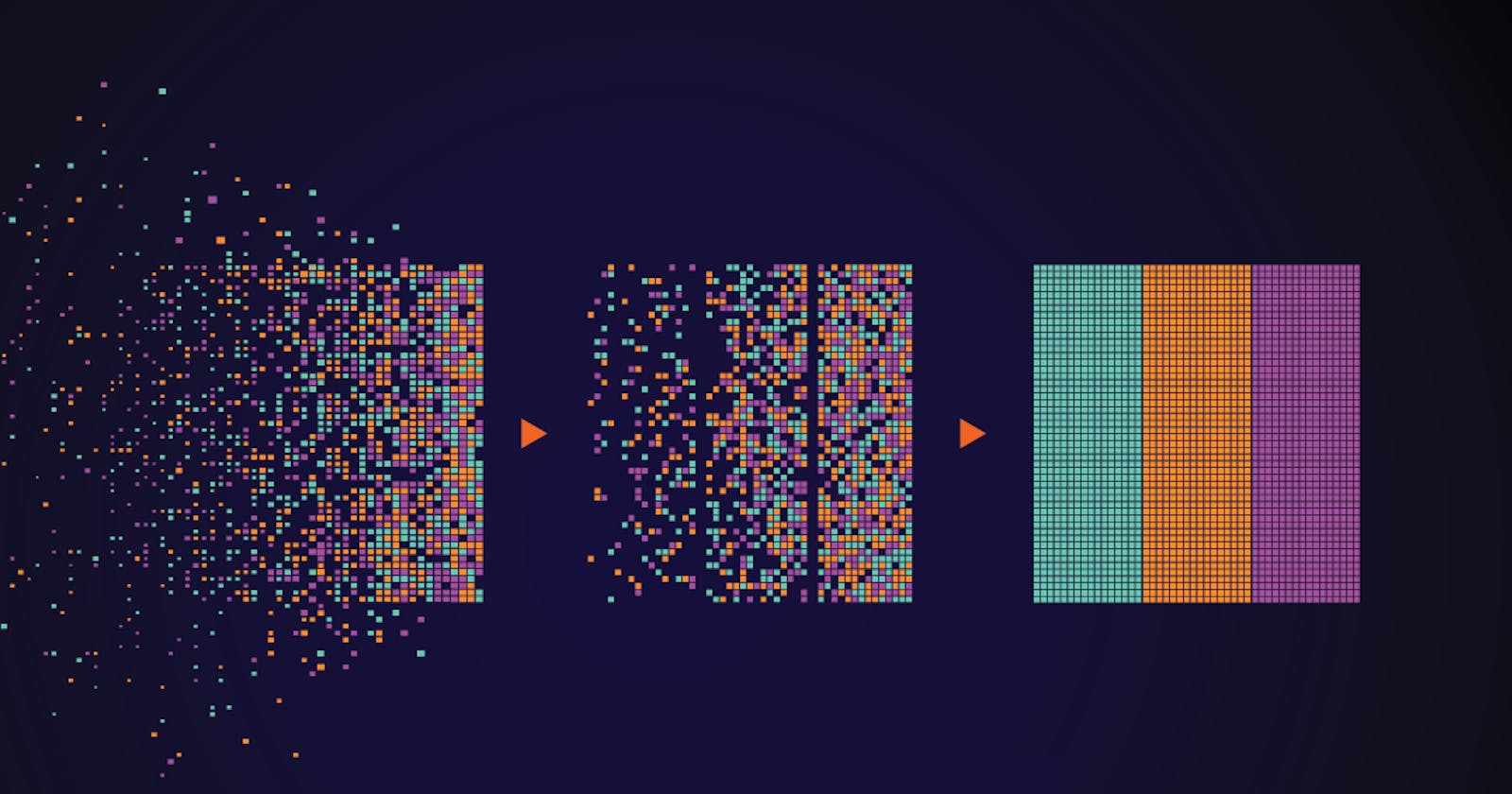

Most projects, from rapid app development to edge computing to integration, require some sort of database tool for the collection, processing, and transfer of data. Data scientists who use the advanced machine learning specific platforms mentioned above are most likely using a database anyway to feed the data into their analytics tools. But why not just run your algorithms on that data as it enters the database? This might be an opportunity to reduce the number of systems needed, ultimately reducing cost and eliminating integration headaches. The less you have to move and transform your data, the better. Immediate access to the data in real time also enables quicker data prep and more efficient processing. You can train and test models on your data right where it sits, and your work will be production ready instead of having to re-run testing in a different system. All of these benefits can yield significant time and cost savings, as well as general ease of use.

So you’re ready to test this theory. Which database should you start with?

Here’s the part where I can argue why HarperDB is a better fit for real-time machine learning than other databases. I’ll let others speak for me here. Joel, the Google Engineer who used HarperDB for a Python & ML app, mentioned that HarperDB is incredibly easy to spin up, easy to connect to, and the horizontal scaling is a really nice bonus. He pointed out that HarperDB reflexively updates, so you can add attributes to rows of your data and the entire table will reflexively update, and that’s super convenient. As he ended his demo, Joel mentioned, “I think there’s lots of little perks [within HarperDB], and I haven't even experienced half of them.”

Machine learning often boils down to some pretty quick and simple commands. It isn’t always all that different from other operations you’re doing in a database. In Joel’s demo, for example, he used the scikit-learn Python package to train a machine learning model on data in HarperDB that predicts whether it's safe to go skydiving based on weather reports. It really is a fun application if you want to check it out!
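To give a feel for how few commands that can involve, here is a minimal sketch. It is not Joel's code: the choice of a decision tree, the two weather features, and every training row below are made up for illustration, and the rows are hard-coded where the demo would have read them from the database.

```python
from sklearn.tree import DecisionTreeClassifier

# Made-up weather rows for illustration only: [wind_mph, cloud_cover_pct].
# In a real app these would be query results, not hard-coded values.
X = [
    [5, 10], [8, 80], [10, 40], [12, 20],   # calm days
    [25, 10], [30, 70], [22, 50], [28, 30], # windy days
]
# Labels for the rows above: 1 = safe to jump, 0 = not safe.
y = [1, 1, 1, 1, 0, 0, 0, 0]

# Train the model (the model type is an assumption, not taken from the demo).
model = DecisionTreeClassifier(random_state=0)
model.fit(X, y)


def is_safe_to_jump(wind_mph: float, cloud_cover_pct: float) -> bool:
    """Predict whether conditions look safe, based on the toy data above."""
    return bool(model.predict([[wind_mph, cloud_cover_pct]])[0] == 1)


print(is_safe_to_jump(6, 50))
print(is_safe_to_jump(27, 20))
# How much each input contributed to the model's decisions.
print(dict(zip(["wind_mph", "cloud_cover_pct"], model.feature_importances_)))
```

Training and prediction are one call each (`fit` and `predict`), which is the point: the model code is often shorter than the code that fetches the data.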

When asked how HarperDB is a better fit for machine learning than other databases he’s worked with, Jaxon, our VP of Product, said the following:

A lot of machine learning applications are based on sensor data - trying to do predictive analytics to figure out if a machine is going to blow up by pulling data from a variety of sensors, external APIs, environmental data, etc. - so you're dumping lots of data into multiple tables at sometimes incredibly high speeds. That’s where HarperDB shines.

HarperDB’s data model allows for fast, simultaneous reads AND writes.

Let’s take the following hypothetical example:

- Sensor data is being written at 5k records/sec.

- Your model uses the most recent 100k records.

- Building your model usually takes 20 seconds.

HarperDB’s single-model architecture is built to deliver the read and write speeds necessary to rebuild your model in real time*. In the real world, of course, very few models are actually built in 20 seconds, but we designed and built HarperDB so that performant reads and writes shouldn’t be a choice: they should be the baseline.
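The arithmetic behind the hypothetical example can be worked through in a few lines. This is a back-of-the-envelope sketch using only the three numbers from the example; the function names are made up for illustration and nothing here measures HarperDB itself.

```python
# Numbers from the hypothetical example (not measurements).
WRITE_RATE = 5_000      # records written per second
WINDOW_SIZE = 100_000   # most recent records the model uses
BUILD_SECONDS = 20      # time it takes to build the model


def window_seconds(window_size: int, write_rate: int) -> float:
    """Seconds of sensor history covered by the model's input window."""
    return window_size / write_rate


def records_during_build(write_rate: int, build_seconds: float) -> int:
    """Records that arrive while one model build is running."""
    return int(write_rate * build_seconds)


def window_turnover(window_size: int, write_rate: int, build_seconds: float) -> float:
    """Fraction of the window replaced by newer records during one build (capped at 1.0)."""
    return min(1.0, records_during_build(write_rate, build_seconds) / window_size)


print(window_seconds(WINDOW_SIZE, WRITE_RATE))                   # 20.0
print(records_during_build(WRITE_RATE, BUILD_SECONDS))           # 100000
print(window_turnover(WINDOW_SIZE, WRITE_RATE, BUILD_SECONDS))   # 1.0
```

With these numbers the window covers 20 seconds of data, and a 20-second build sees the whole window replaced by the time it finishes. Keeping the model current therefore means reading 100k records for each rebuild while writes continue at 5k records/sec, which is why simultaneous read and write speed matters in this scenario.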

Because of our single-model architecture, HarperDB lets you turn insight into action more quickly, without interim ETL processes, keeping large data sets in RAM, or worrying about fault tolerance in case of an error or network issues.

Jaxon continued, “We also support the JSON data type: data composed of multiple sensor values that are dumped into one field. HarperDB can query on that nested value, and even join on another table, unlike MongoDB where you end up doing that in the code. This can become a part of your core query, which simplifies things greatly.”

Still curious about how HarperDB is different from other databases on the market?

Our benchmarks are a great place to start. They indicate that we’re much faster than databases like MongoDB and SQLite, while providing the flexibility of both. Jaxon also mentioned that with a normal connector model, you often have to open or maintain a connection, and then remember to always close it inside the right set of brackets, otherwise the connection won't be there when you’re actually trying to pull the data. HarperDB uses an HTTP API, so each operation is a discrete, self-contained request, and once that request is over there is no connection left to close. From a developer's perspective, that makes HarperDB super easy to work with in code. You’re probably already making other API requests from your backend using a library like Axios, or from the browser using window.fetch(). Using HarperDB means you don’t need to include yet another dependency just to get data into and out of your database. It fits incredibly naturally when building apps that make calls to APIs: this is just another API.
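As a rough sketch of what "just another API" looks like in practice, the snippet below builds a single HTTP request that carries one SQL operation, using only Python's standard library. The JSON body shape (`operation` and `sql` keys), Basic authentication, the URL, the credentials, and the table name are all assumptions for illustration; check the HarperDB documentation for the exact format your version expects.

```python
import base64
import json
import urllib.request


def build_sql_request(url: str, username: str, password: str, sql: str) -> urllib.request.Request:
    """Build (but do not send) one self-contained HTTP request carrying one operation."""
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    # Assumed body shape: a single JSON object naming the operation to run.
    body = json.dumps({"operation": "sql", "sql": sql}).encode()
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {token}",
        },
    )


def run_sql(url: str, username: str, password: str, sql: str):
    """Send the request and decode the JSON response. No long-lived connection object is kept."""
    request = build_sql_request(url, username, password, sql)
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())


# Placeholder URL, credentials, and table name: replace with your own.
request = build_sql_request(
    "http://localhost:9925", "user", "pass", "SELECT * FROM dev.sensor_readings"
)
print(request.get_method(), request.full_url)
print(request.data.decode())
```

Calling `run_sql(...)` with real instance details would send the request. There is no connection handle to open, pass around, or remember to close between calls; each call stands alone.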

HarperDB can run anywhere: in the cloud, locally, in a data center, etc. It provides flexibility with NoSQL and SQL functionality while being programming language agnostic. Lastly, HarperDB has an incredibly smooth and intuitive Management Studio / GUI that lets users install, design, cluster, and manage databases in one interface without writing a line of code. You can insert data as JSON, as CSVs, or via SQL through a simple-to-use, single-endpoint REST API. While Joel was running his Python demo, he mentioned that the CSV drag and drop is super convenient, and that you can visualize your data very easily in this well-built app (the Studio). He was also surprised by the pure speed of HarperDB when running his machine learning model to test how much each input has an effect on the output, saying “Wow that was really really fast, considering it’s pulling 10,000 items from two different tables, from remote storage, and training a model and using that model to predict different outcomes.” (Joel was using a free HarperDB instance, and I believe the operation he’s referring to completed in under one second.) Check out HarperDB to see for yourself, and let us know what you think!

What are your thoughts? Do you run machine learning models inside your database? Why or why not?

*Obviously, this depends on the size of the sensor data object being inserted, and the complexity of the query attempting to read it, but for sensors writing a few keys’ worth of data per row and a blanket `select *` read, HarperDB delivers.